Paging


22. Paging

22.1. Paged Address Translation

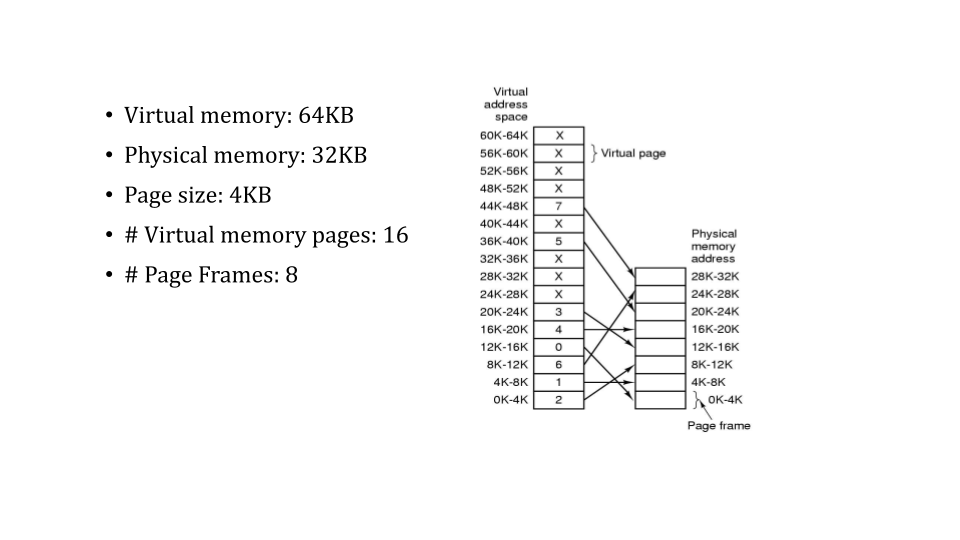

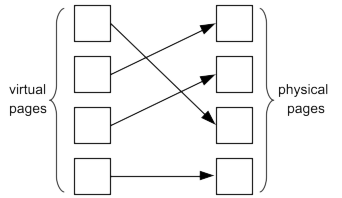

Most systems today use paged address translation, or paging. A paged virtual memory system divides both virtual memory and physical memory into fixed-size chunks. These are known as virtual pages (or just pages) in the virtual address space and as page frames in physical memory. Each page of virtual memory that is in use maps to a page frame.

Fig. 22.1 Paged virtual memory example

One benefit of paging over segmentation is that the virtual address space can be much larger than physical memory. In the segmentation memory model it is not possible to run a program that requires more memory than the system has, so a program may run on a system with a large amount of memory and fail to run on a system with less. On a paged memory system every process has a virtual address space of exactly the same size, typically gigabytes, terabytes or even petabytes on modern systems, but in most cases uses only a small portion of it. Physical memory is typically much smaller than a single virtual address space (megabytes or gigabytes), and there can be hundreds or even thousands of processes running on a system. As a process runs on a paged memory system, only the virtual pages it needs at that moment must be in physical memory, and these are a small subset of its address space. The memory management system takes care of ensuring that the necessary virtual-to-physical mappings are in place. On a paged memory system, a large program running on a machine with little memory runs slower rather than not at all.

Another benefit of paging over segmentation is that a region of virtual memory (a memory segment) does not need to map to physically contiguous memory. Because the virtual address space is divided into small fixed-size pages, a virtually contiguous region can map to page frames scattered arbitrarily throughout physical memory. This completely eliminates external fragmentation and therefore the need for compaction. The only fragmentation encountered in a paged virtual memory system is internal fragmentation: the unused portion of the last page at the end of a region of virtual memory.

22.2. MMU Memory Management Unit

On paged systems the CPU includes special hardware, known as the Memory Management Unit (MMU), that applies the virtual-to-physical page mappings. Every time a virtual address is referenced, the MMU translates it to a physical address before presenting it to the memory address bus.

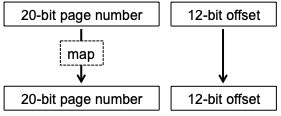

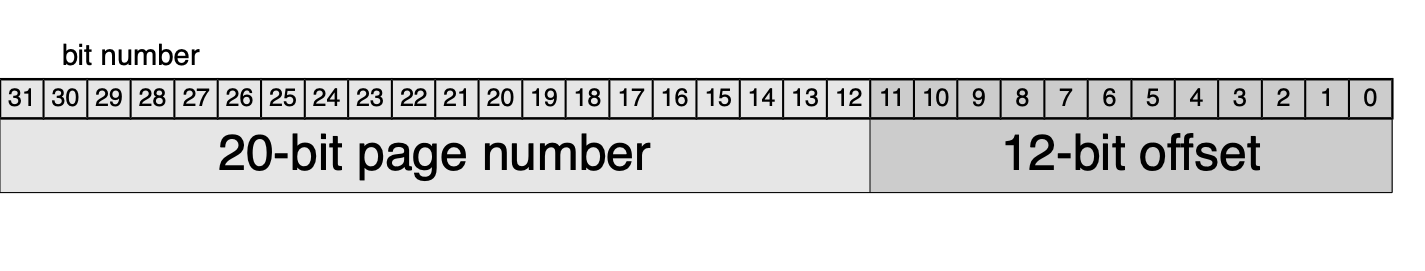

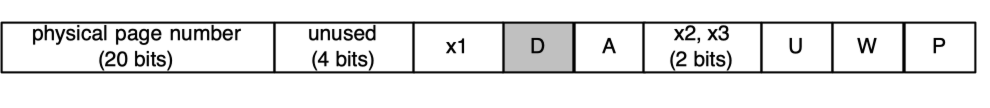

We examine a single model of address translation in detail: the one used by the original Pentium, and by any Intel-compatible CPU running in 32-bit mode. It uses 32-bit virtual addresses, 32-bit physical addresses, and a page size of 4096 bytes. Since pages are \(2^{12}\) bytes each, addresses can be divided into 20-bit page numbers and 12-bit offsets within each page, as shown in the accompanying figure.


The Memory Management Unit (MMU) maps a 20-bit virtual page number to a 20-bit physical page number; the offset passes through unchanged, giving the physical address the CPU should access (see the accompanying figure).
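The split and the translation can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the dictionary `mapping`, the helper names `split` and `translate`, and the example addresses are all assumptions made for this sketch, and a missing dictionary key stands in for a page fault.

```python
PAGE_SHIFT = 12                  # 4096-byte pages: 2**12
PAGE_SIZE = 1 << PAGE_SHIFT
OFFSET_MASK = PAGE_SIZE - 1      # selects the low 12 bits

def split(vaddr):
    """Return (virtual page number, offset) for a 32-bit virtual address."""
    return vaddr >> PAGE_SHIFT, vaddr & OFFSET_MASK

def translate(vaddr, mapping):
    """Translate vaddr using a dict of virtual page number -> physical page number."""
    vpn, offset = split(vaddr)
    ppn = mapping[vpn]           # a KeyError here stands in for a page fault
    return (ppn << PAGE_SHIFT) | offset   # the offset passes through unchanged

# Hypothetical mapping: virtual page 0x08048 -> physical page 0x00123
mapping = {0x08048: 0x00123}
print(hex(translate(0x08048ABC, mapping)))   # 0x123abc
```

Only the upper 20 bits change; the low 12 bits (`0xABC` here) are identical in the virtual and physical address.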


Although paged address translation is far more flexible than base and bounds registers, it requires much more information. Base and bounds translation requires only two values, which can easily be held in registers in the MMU. In contrast, paged translation must be able to handle a separate mapping value for each of over a million virtual pages (although most programs map only a fraction of those pages). The only practical place to store this much information is in memory itself, so the MMU uses page tables in memory to specify virtual-to-physical mappings.
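The "over a million" figure follows directly from the address layout. The sketch below works through the arithmetic; the 4-byte entry size and the single flat table are simplifying assumptions made here to give a sense of scale, not a description of the actual table layout.

```python
VIRTUAL_ADDRESS_BITS = 32
PAGE_SHIFT = 12        # 4096-byte pages
PTE_BYTES = 4          # assumption: one 32-bit entry per virtual page

num_pages = 1 << (VIRTUAL_ADDRESS_BITS - PAGE_SHIFT)   # 2**20 virtual pages
flat_table_bytes = num_pages * PTE_BYTES               # one flat table per process

print(num_pages)                          # 1048576
print(flat_table_bytes // (1024 * 1024))  # 4 (MiB)
```

Under these assumptions, megabytes of mapping data per address space is far more than a few MMU registers can hold.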

22.2.1. Memory Over-Commitment and Paging

Page faults allow data to be dynamically fetched into memory when it is needed, in the same way that the CPU dynamically fetches data from memory into the cache. This allows the operating system to over-commit memory: the sum of all process address spaces can add up to more memory than is available, although the total amount of memory mapped at any point in time must fit into RAM. This means that when a page fault occurs and a page is allocated to a process, another page (from that or another process) may need to be evicted from memory.
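A toy simulation may make the fault-then-evict cycle concrete. Everything here is an assumption made for illustration: the class `TinyRAM`, the three-frame memory, and in particular the first-in-first-out eviction choice, which is used only because it is the simplest policy to write down.

```python
from collections import OrderedDict

class TinyRAM:
    """Toy model: at most `frames` pages resident at once, FIFO eviction."""

    def __init__(self, frames):
        self.frames = frames
        self.resident = OrderedDict()   # (pid, vpn) keys, oldest first
        self.faults = 0
        self.evictions = 0

    def access(self, pid, vpn):
        key = (pid, vpn)
        if key in self.resident:
            return                      # already mapped: no fault
        self.faults += 1                # page fault: the page must be brought in
        if len(self.resident) >= self.frames:
            self.resident.popitem(last=False)   # evict the oldest resident page
            self.evictions += 1
        self.resident[key] = True

# Two processes touch four distinct pages in total, but only three frames exist.
ram = TinyRAM(frames=3)
for pid, vpn in [(1, 0), (1, 1), (2, 0), (2, 1), (1, 0)]:
    ram.access(pid, vpn)
print(ram.faults, ram.evictions)   # 5 2
```

The address spaces together need four pages, more than the three frames available, yet every access succeeds because at most three pages are mapped at any moment.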

There are two types of regions in a user’s virtual address space: file-backed and anonymous.

File-backed regions are contiguous portions of a file on storage that are mapped into a user’s virtual address space. For example, the exec() system call mmap()s the text and data sections of an executable file into a user’s address space at predetermined virtual addresses. Most of the page frames mapped into file-backed regions are read-only mappings; they are never modified and so never need to be flushed or written back to storage. When a process exits and munmap()s its file-backed regions, nothing is deleted: the contents all come from a file that still exists on some storage device.

Anonymous memory regions do not correspond to a file. Instead, they are the data, heap and stack regions of the user’s virtual address space. Unlike file-backed regions, the page frames mapped into anonymous regions are almost always modified; there would be little sense in allocating from a heap and only reading from it. For this reason a page frame mapped into an anonymous region must be written to some storage location before it can be reclaimed. Also unlike file-backed regions, when a process exits all the page frames mapped into its anonymous regions are discarded and therefore immediately freed. The data, heap and stack contents of an exiting process are of no value to any other process and can therefore be eliminated.

Evicting a read-only page mapped from a file is simple: just forget the mapping and free the page; if a fault for that page occurs later, the page can be read back from disk. Occasionally pages are mapped read/write from a file, when a program explicitly requests it with mmap. In that case the OS can write any modified data back to the file and then evict the page; again, it can be paged back in from disk if needed.

Anonymous segments such as stack and heap are typically created in memory and do not need to be swapped; however, if the system runs low on memory it may evict anonymous pages owned by idle processes in order to give more memory to the currently running ones. To do this the OS allocates a location in “swap space” on disk: typically a dedicated swap partition in Linux, and the PAGEFILE.sys and /var/vm/swapfile files in Windows and OS X respectively. The data must first be written out to that location; then the OS can store the page-to-location mapping and release the memory page.
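The eviction cases described above can be summarized as a small decision function. This is a sketch of the reasoning only: the function name `eviction_steps` and the step strings are hypothetical, and it assumes an anonymous page is always treated as modified.

```python
def eviction_steps(file_backed, dirty):
    """Return the steps needed before a page frame can be reused."""
    if file_backed:
        if dirty:
            # Mapped read/write and modified: the file must be updated first.
            return ["write page back to file", "drop mapping", "free frame"]
        # Clean file-backed page: the file already holds an identical copy.
        return ["drop mapping", "free frame"]
    # Anonymous page: no file holds a copy, so it must be saved to swap space.
    # Assumption: anonymous pages are always treated as modified.
    return ["allocate swap slot", "write page to swap",
            "record page-to-slot mapping", "free frame"]

print(eviction_steps(file_backed=True, dirty=False))
# ['drop mapping', 'free frame']
```

The clean file-backed case is the cheapest because it needs no I/O at eviction time; the other two must finish a disk write before the frame can be released.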

Hint: Linux borrows the page table entry (PTE) to store the location of a swapped-out page. As long as the PTE present bit is not set, the MMU hardware will not attempt to translate the address; instead it raises a page fault. The rest of the PTE is therefore free for software to use when the present bit is not set.
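The idea can be sketched as follows. The present bit is bit 0 of a 32-bit x86 PTE, but the swap encoding shown here (slot number stored in bits 1 and up) is a hypothetical layout chosen for illustration, not the layout Linux actually uses; the helper names are likewise assumptions, and the other flag bits of a present PTE are ignored.

```python
PRESENT = 0x1      # bit 0 of the PTE: set means the MMU may use the entry
PAGE_SHIFT = 12

def make_present_pte(ppn):
    """PTE for a resident page: physical page number plus the present bit."""
    return (ppn << PAGE_SHIFT) | PRESENT

def make_swapped_pte(swap_slot):
    """Hypothetical layout: swap slot in bits 1 and up, present bit clear."""
    return swap_slot << 1

def decode(pte):
    if pte & PRESENT:
        return ("present", pte >> PAGE_SHIFT)
    return ("swapped", pte >> 1)       # hardware never interprets these bits

print(decode(make_present_pte(0x123)))   # ('present', 291)
print(decode(make_swapped_pte(42)))      # ('swapped', 42)
```

Because the hardware only checks the present bit before faulting, the fault handler can read the swap slot straight out of the entry that caused the fault.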


22.3. Paging - Avoiding Fragmentation

The fragmentation illustrated earlier, in which free memory is broken into pieces between allocated regions, is termed external fragmentation, because the wasted memory is external to the allocated regions. It can be avoided by compacting memory: moving existing allocations around so that multiple blocks of free memory are consolidated into a single large chunk. This is a slow process, requiring processes to be paused, large amounts of memory to be copied, and base+bounds registers to be modified to point to the new locations[^2].


Instead, modern CPUs use paged address translation, which divides the physical and virtual memory spaces into fixed-size pages, typically 4 KB, and provides a flexible mapping between virtual and physical pages, as shown in the accompanying figure. The operating system can then maintain a list of free physical pages and allocate them as needed. Because any combination of physical pages may be used for an allocation request, there is no external fragmentation, and a request will not fail as long as there are enough free physical pages to fulfill it.

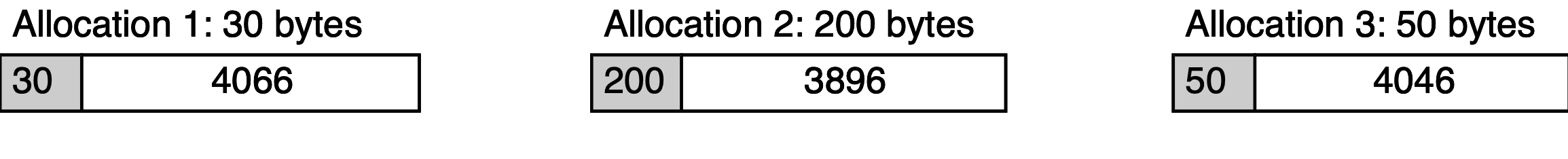
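A free-page list of this kind can be sketched as below. The class `FrameAllocator` and the frame numbers are hypothetical; the point is only that a request for `n` pages succeeds whenever `n` frames are free, wherever they happen to sit in physical memory.

```python
class FrameAllocator:
    """Toy free list of physical page frame numbers."""

    def __init__(self, free_frames):
        self.free = list(free_frames)

    def allocate(self, n):
        """Return n frame numbers, or None if fewer than n frames are free."""
        if n > len(self.free):
            return None
        return [self.free.pop() for _ in range(n)]

    def release(self, frames):
        self.free.extend(frames)

# The free frames are scattered, yet a 3-page request still succeeds.
alloc = FrameAllocator([3, 9, 10, 42, 57])
frames = alloc.allocate(3)
mapping = dict(enumerate(frames))     # virtual pages 0..2 -> physical frames
print(mapping)                        # {0: 57, 1: 42, 2: 10}
print(alloc.allocate(3))              # None: only two frames remain
```

The virtual pages 0, 1 and 2 are contiguous even though frames 57, 42 and 10 are not, so no compaction is ever needed.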

22.3.1. Internal Fragmentation

Paging solves the problem of external fragmentation: there is no wasted space between pages of virtual memory. However, paging does suffer from another issue, internal fragmentation, in which space is wasted inside the allocated pages. For example, if 10 KB of memory is allocated in 4 KB pages, 3 pages (a total of 12 KB) are allocated, and 2 KB is wasted. When allocating hundreds of KB in 4 KB pages this is a minor overhead: on average about half a page, or 2 KB, is wasted per allocation. But internal fragmentation makes this approach inefficient for very small allocations (e.g. the new operator in C++), as the accompanying figure illustrates. (It is also one reason why, even though most CPUs support multi-megabyte or even multi-gigabyte “huge” pages, which are slightly more efficient than 4 KB pages, they are rarely used.)
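The waste for a given request can be computed directly. The helper `pages_and_waste` below is a hypothetical name used for this sketch; it reproduces the 10 KB example from the text and shows why a tiny allocation is a poor fit for whole-page allocation.

```python
PAGE_SIZE = 4096

def pages_and_waste(request_bytes, page_size=PAGE_SIZE):
    """Return (pages allocated, bytes wasted) for a request rounded up to pages."""
    pages = -(-request_bytes // page_size)        # ceiling division
    return pages, pages * page_size - request_bytes

print(pages_and_waste(10 * 1024))   # (3, 2048): 10 KB needs 3 pages, 2 KB wasted
print(pages_and_waste(16))          # (1, 4080): a 16-byte object wastes almost a page
```

With a larger page size the same rounding wastes proportionally more, which is the huge-page concern mentioned above.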
